A workflow is made up of an orchestrated and repeatable pattern of activity. These activities are enabled by resources that are systematically organized into processes. These processes transform materials, provide services, or process information. A workflow can be depicted as a sequence of operations, the work of a person or group, the work of an organization of staff, or one or more simple or complex mechanisms.

In computing, a pipeline (also known as a data pipeline) is a connected series of data processing elements, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in a time-sliced way.
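To make the definition concrete, here is a minimal Python sketch of a pipeline built from generator stages. The stage names (`read_lines`, `parse`, `square`) and the in-memory input are hypothetical. Each stage lazily consumes the output of the previous one, so the stages run interleaved in a single thread. This is a simple form of time-slicing, not true parallelism.

```python
from typing import Iterable, Iterator

# Each stage is a generator: it pulls items from the previous stage on
# demand, so records flow through the pipeline one at a time.


def read_lines(lines: Iterable[str]) -> Iterator[str]:
    """Source stage: emit raw records (hypothetical in-memory input)."""
    for line in lines:
        yield line


def parse(records: Iterable[str]) -> Iterator[int]:
    """Transform stage: strip whitespace, skip blanks, convert to int."""
    for record in records:
        record = record.strip()
        if record:
            yield int(record)


def square(numbers: Iterable[int]) -> Iterator[int]:
    """Transform stage: square each number."""
    for n in numbers:
        yield n * n


def run_pipeline(raw: Iterable[str]) -> list[int]:
    # The output of each stage is the input of the next one.
    return list(square(parse(read_lines(raw))))


print(run_pipeline(["1", " 2 ", "", "3"]))  # [1, 4, 9]
```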

Workflow Types

There are several different workflow types, and data-oriented workflows are becoming increasingly important because data plays a crucial role in product development and management. Companies and projects are continuously becoming more data-driven, adopting data-centric processes that combine different systems for product development and data analysis. These data-oriented workflows implement data pipelines.

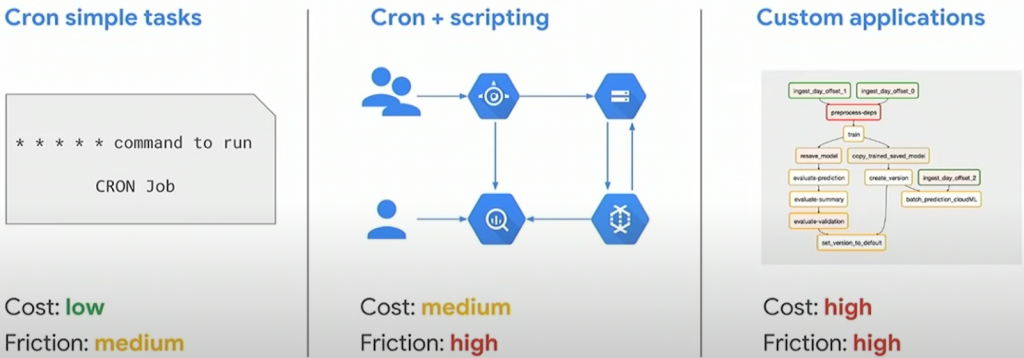

There are many different ways to implement a workflow. They commonly range from simple cron jobs to workflows implemented in custom applications.

Flexible, Easy Data Pipelines on Google Cloud with Cloud Composer (Cloud Next ’18): https://youtu.be/GeNFEtt-D4k

These common approaches do not generalize: for each workflow implementation, developers end up building the workflow engine, data pipelines, data tasks, and business rules. A workflow manager must support easy creation, scheduling, and monitoring of workflows, so that developers can focus on delivering value through the data pipeline rather than on building workflow engines.

What is a Data Pipeline?

The workflow manager facilitates the creation and scheduling of a data pipeline.

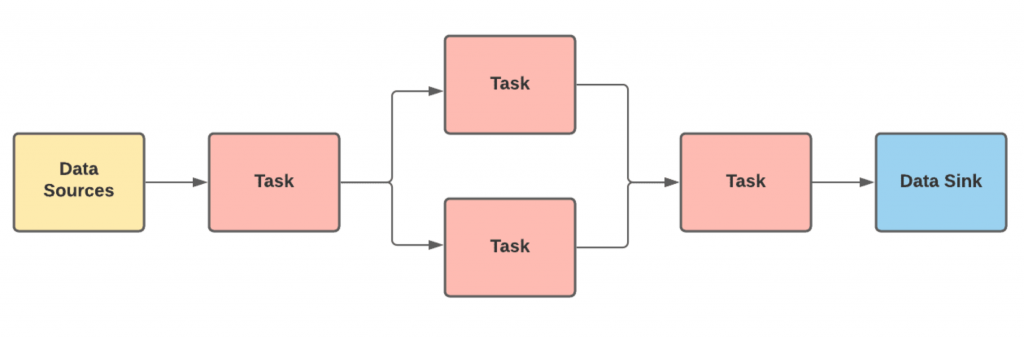

A data pipeline is an ordered execution of individual tasks, in sequence or in parallel, that takes data from different data sources, transforms it, and ultimately loads it into sinks that support business operations.
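As an illustration only, the sketch below walks through that definition in Python: two independent extract tasks run in parallel, a transform task joins their results, and a load task writes to a sink. The sources, record fields, and function names are hypothetical stand-ins. A real pipeline would read from databases, APIs, or files and load into something like a warehouse table.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical sources: real tasks would query databases, APIs, or files.
def extract_orders() -> list[dict]:
    return [
        {"order_id": 1, "customer_id": "c1", "amount": 30.0},
        {"order_id": 2, "customer_id": "c2", "amount": 12.5},
        {"order_id": 3, "customer_id": "c1", "amount": 7.5},
    ]


def extract_customers() -> dict[str, str]:
    return {"c1": "Acme", "c2": "Globex"}


def transform(orders: list[dict], customers: dict[str, str]) -> dict[str, float]:
    """Join orders to customer names and total the amount per customer."""
    totals: dict[str, float] = {}
    for order in orders:
        name = customers[order["customer_id"]]
        totals[name] = totals.get(name, 0.0) + order["amount"]
    return totals


def load(totals: dict[str, float], sink: dict[str, float]) -> None:
    """Write results to a sink (a dict stands in for a warehouse table)."""
    sink.update(totals)


def run() -> dict[str, float]:
    sink: dict[str, float] = {}
    # The two extract tasks do not depend on each other, so run them in parallel.
    with ThreadPoolExecutor(max_workers=2) as pool:
        orders_future = pool.submit(extract_orders)
        customers_future = pool.submit(extract_customers)
        orders, customers = orders_future.result(), customers_future.result()
    # Transform depends on both extracts; load depends on transform.
    load(transform(orders, customers), sink)
    return sink


print(run())  # {'Acme': 37.5, 'Globex': 12.5}
```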

Data Pipeline Example

As the diagram above shows, a data pipeline is made up of tasks and can be composed of many tasks intertwined with dependencies.

This makes a task management strategy essential. Task management is mainly concerned with two things: task dependencies and the order of task execution. Graph theory addresses both concerns through the directed acyclic graph (DAG), which provides an efficient way to manage tasks.
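One common way to express task dependencies is to map each task to the set of tasks it depends on, which defines a DAG. The sketch below assumes Python 3.9+ and uses the standard library's `graphlib.TopologicalSorter` to derive a valid order of execution. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}

# static_order() yields a valid order of execution: every task appears
# after all of the tasks it depends on.
order = list(TopologicalSorter(dependencies).static_order())
print(order)

# A dependency loop makes the graph cyclic, so no valid order exists.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError as err:
    print("cycle detected:", err.args[1])
```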

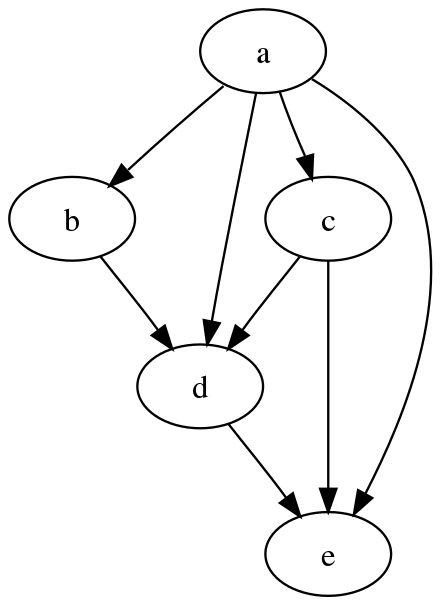

What is DAG?

In mathematics, particularly graph theory and computer science, a directed acyclic graph (DAG) is a directed graph with no directed cycles. That means it consists of vertices and edges (also called arcs), with each edge directed from one vertex to another, such that following those directions will never form a closed loop.
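The "no directed cycles" property can be checked with a depth-first search that marks each vertex as unvisited, in progress, or finished: reaching an in-progress vertex again means the edges lead back onto the current path, which forms a closed loop. This is a minimal recursive sketch, assuming the graph is small enough for Python's default recursion limit. The helper names are illustrative.

```python
from collections.abc import Hashable, Iterable, Mapping

WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished


def has_cycle(graph: Mapping[Hashable, Iterable[Hashable]]) -> bool:
    """Return True if the directed graph contains a directed cycle.

    `graph` maps each vertex to the vertices its outgoing edges point to.
    """
    color: dict = {}

    def visit(v: Hashable) -> bool:
        color[v] = GRAY
        for w in graph.get(v, ()):
            state = color.get(w, WHITE)
            if state == GRAY:
                return True  # edge back onto the current path: a closed loop
            if state == WHITE and visit(w):
                return True
        color[v] = BLACK
        return False

    return any(color.get(v, WHITE) == WHITE and visit(v) for v in graph)


def is_dag(graph: Mapping[Hashable, Iterable[Hashable]]) -> bool:
    return not has_cycle(graph)


print(is_dag({"a": ["b", "c"], "b": ["d"], "c": ["d"], "d": []}))  # True
print(is_dag({"a": ["b"], "b": ["c"], "c": ["a"]}))                # False
```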

A directed graph is a DAG if (and only if) it can be topologically ordered by arranging the vertices in a linear order consistent with all edge directions. DAGs have many scientific and computational applications, ranging from biology (evolution, family trees, epidemiology) to sociology (citation networks) to computation (scheduling).
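The "if and only if" can be seen in Kahn's algorithm, one standard way to compute a topological order: it repeatedly removes vertices that have no remaining incoming edges. If every vertex gets removed, the removal order is a linear order consistent with all edge directions; if some vertices never reach in-degree zero, they lie on or downstream of a cycle and no such order exists. The sketch below is illustrative and sorts the initial vertices only so that the output is deterministic.

```python
from collections import deque
from typing import Optional


def topological_order(graph: dict[str, list[str]]) -> Optional[list[str]]:
    """Kahn's algorithm.

    `graph` maps each vertex to the vertices its outgoing edges point to.
    Returns the vertices in an order consistent with every edge direction,
    or None when no such order exists (the graph has a directed cycle).
    """
    # Collect every vertex, including ones that only appear as edge targets.
    vertices = set(graph)
    for targets in graph.values():
        vertices.update(targets)

    # Count incoming edges for each vertex.
    indegree = {v: 0 for v in vertices}
    for targets in graph.values():
        for w in targets:
            indegree[w] += 1

    # Start from vertices with no incoming edges (sorted for determinism).
    ready = deque(sorted(v for v in vertices if indegree[v] == 0))
    order: list[str] = []
    while ready:
        v = ready.popleft()
        order.append(v)
        # "Remove" v: each successor loses one incoming edge.
        for w in graph.get(v, []):
            indegree[w] -= 1
            if indegree[w] == 0:
                ready.append(w)

    # Vertices on or downstream of a cycle never reach in-degree zero.
    return order if len(order) == len(vertices) else None


print(topological_order({"a": ["b", "c"], "b": ["d"], "c": ["d"]}))  # ['a', 'b', 'c', 'd']
print(topological_order({"a": ["b"], "b": ["a"]}))                    # None
```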

Example of a DAG – https://upload.wikimedia.org/wikipedia/commons/5/51/Tred-G.png

Using DAGs to manage the tasks in a data pipeline gives us a methodology, grounded in both theory and practice, for handling task dependencies and order of execution. DAG-based task management is a fundamental and necessary feature of a workflow manager.
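To show how a workflow manager might use a DAG for both dependencies and order of execution, here is a small scheduler sketch built on Python's `graphlib.TopologicalSorter` (3.9+) and a thread pool. It is a hypothetical illustration, not the implementation used by Apache Airflow or any other tool; `run_dag` and the task names are assumptions. Tasks whose dependencies have all finished are submitted together, so independent tasks can run in parallel.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable


def run_dag(tasks: dict[str, Callable[[], None]],
            dependencies: dict[str, set[str]],
            max_workers: int = 4) -> list[str]:
    """Run every task in `tasks` once all of its dependencies have finished.

    `dependencies` maps a task name to the names it depends on (all of which
    must also be keys of `tasks`); tasks missing from it have no
    dependencies. Returns task names in completion order.
    """
    sorter = TopologicalSorter()
    for name in tasks:
        sorter.add(name, *dependencies.get(name, ()))
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG

    completed: list[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}  # future -> task name
        while sorter.is_active():
            # Submit every task whose dependencies are all done.
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            # Block until at least one running task finishes.
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                future.result()  # re-raise the task's exception, if any
                sorter.done(name)  # may unlock downstream tasks
                completed.append(name)
    return completed


# Hypothetical pipeline: each task just records its own name.
log: list[str] = []
tasks = {
    name: (lambda n=name: log.append(n))
    for name in ["extract_orders", "extract_customers", "transform", "load_warehouse"]
}
dependencies = {
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
}
order = run_dag(tasks, dependencies)
print(order)
```

The two extract tasks may finish in either order, but `transform` always runs after both of them and `load_warehouse` always runs last.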

Workflow Manager

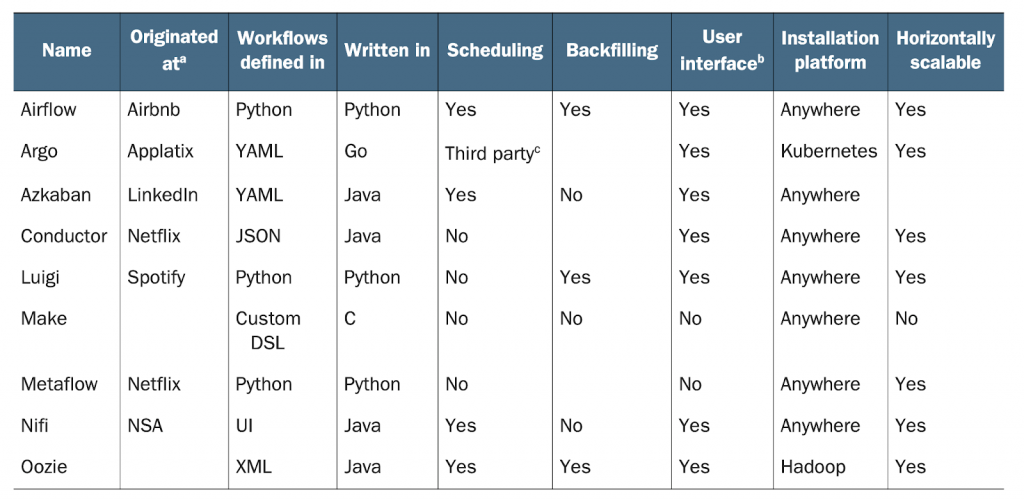

The current cloud native ecosystem offers several workflow managers with different features. Translucent Computing applies a common selection pattern when choosing tools for cloud native environments: we look at a tool’s maturity and choose one that marries theory and practice. This is why we use Apache Airflow, an open-source workflow manager that supports workflow creation, scheduling, and monitoring, with workflows defined as DAGs.

There’s more in our pipeline when it comes to pipeline content, so stay tuned for more!

November 22nd, 2021

by Patryk Golabek in Technology
