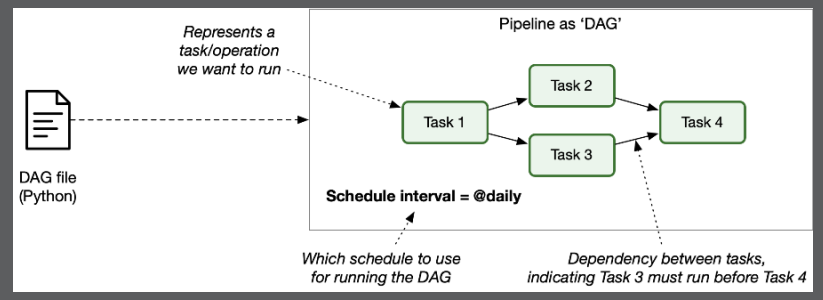

Apache Airflow (AA) is an open-source workflow management tool for creating, scheduling, and monitoring workflows. AA lets you define workflows as code in Python. Data pipelines, or workflows, are defined as Directed Acyclic Graphs (DAGs) of tasks. A DAG captures the order in which tasks execute and the dependencies between them.
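In Airflow itself, dependencies are declared in the DAG file with syntax such as `extract >> transform`. The sketch below does not use Airflow. It uses Python's standard `graphlib` module (Python 3.9+) to illustrate the idea behind a DAG: dependencies determine a valid execution order, and a cycle makes the graph invalid. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}

# static_order() yields each task only after all of its upstream tasks.
order = list(TopologicalSorter(pipeline).static_order())
print(order)

# A cycle means there is no valid execution order, so it is rejected.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected: not a valid DAG")
```

`transform` and `validate` do not depend on each other, so either may come first; only `extract` before them and `load` after them is guaranteed.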

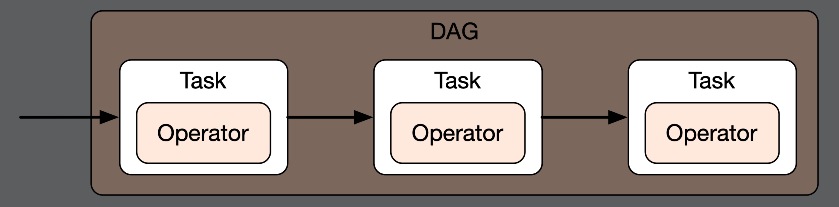

A task is a unit of work in a data pipeline, such as a SQL query that loads data. The work itself is implemented by an operator, and the task manages the operator's execution state.
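To make the split between task and operator concrete, here is a simplified sketch. In Airflow, operators subclass `BaseOperator` and implement `execute(context)`, and a task instance records its state in the metadata database; the classes below (`EchoOperator`, `Task`, `State`) are hypothetical stand-ins, not Airflow's API.

```python
from enum import Enum


class State(Enum):
    NONE = "none"
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"


class EchoOperator:
    """Hypothetical operator: holds the actual piece of work."""

    def __init__(self, message):
        self.message = message

    def execute(self):
        return f"echo {self.message}"


class Task:
    """Hypothetical task: runs its operator and tracks the resulting state."""

    def __init__(self, task_id, operator):
        self.task_id = task_id
        self.operator = operator
        self.state = State.NONE
        self.result = None

    def run(self):
        self.state = State.RUNNING
        try:
            self.result = self.operator.execute()
            self.state = State.SUCCESS
        except Exception:
            self.state = State.FAILED
            raise
        return self.result


task = Task("say_hello", EchoOperator("hello"))
task.run()
print(task.state.value)  # prints "success"
```

Keeping state in the task rather than the operator means the same operator logic can be reused by many tasks, each with its own history of runs.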

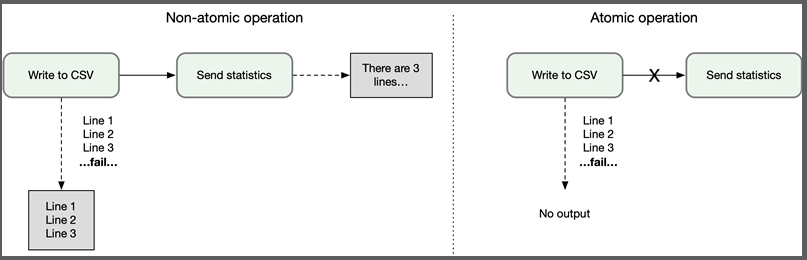

Taking lessons from transactional database systems, tasks should be atomic and idempotent. An atomic task either completes entirely or has no effect; it never leaves half-finished work behind. An idempotent task has no additional effects when it is rerun: executing the same task multiple times with the same inputs should not change the overall output.
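One common way to get both properties for a file-writing task is sketched below, assuming a hypothetical task that writes one JSON file per run date. Atomicity comes from writing to a temporary file and moving it into place with `os.replace` (a rename on the same filesystem, so readers see either the old file or the new one). Idempotency comes from deriving the output path only from the run date, so a retry overwrites the same partition instead of appending duplicates. The function name and file layout are illustrative, not taken from Airflow.

```python
import json
import os
import tempfile
from pathlib import Path


def write_partition(out_dir, run_date, rows):
    """Hypothetical load task: write `rows` for one run date, atomically."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    # Idempotent: the target depends only on run_date, so reruns overwrite it.
    target = out_dir / f"sales_{run_date}.json"
    # Temp file in the same directory so os.replace is a same-filesystem rename.
    fd, tmp_path = tempfile.mkstemp(dir=out_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(rows, f)
        # Atomic: the complete file appears under its final name in one step.
        os.replace(tmp_path, target)
    except BaseException:
        os.unlink(tmp_path)  # leave no half-written output behind
        raise
    return target


with tempfile.TemporaryDirectory() as tmp:
    rows = [{"order_id": 1, "amount": 9.5}]
    first = write_partition(tmp, "2021-12-03", rows)
    second = write_partition(tmp, "2021-12-03", rows)  # simulated retry
    print(first == second, len(os.listdir(tmp)))  # prints "True 1"
```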

AA ships with operators for common kinds of work, including running Bash commands, executing SQL, and sending email. AA also provides sensors, a special type of operator that repeatedly polls until a given condition becomes true. A sensor can, for example, wait for a file to appear or check a database for specific records.
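The polling loop at the heart of a sensor can be sketched in a few lines. The parameter names echo the `poke_interval` and `timeout` arguments (in seconds) of Airflow's sensors, but `wait_for` and `file_exists` are hypothetical helpers, not Airflow's API.

```python
import time
from pathlib import Path


def wait_for(condition, poke_interval=5.0, timeout=60.0):
    """Hypothetical sensor: poll `condition` until it returns True.

    Returns True once the condition holds; raises TimeoutError if it is
    still false after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poke_interval)


def file_exists(path):
    """Build a condition that checks whether a file has appeared."""
    return lambda: Path(path).exists()
```

For example, `wait_for(file_exists("/data/incoming/sales.csv"), poke_interval=30, timeout=3600)` would check every 30 seconds for up to an hour (the path is hypothetical). Using `time.monotonic()` keeps the deadline correct even if the system clock changes.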

Airflow Architecture

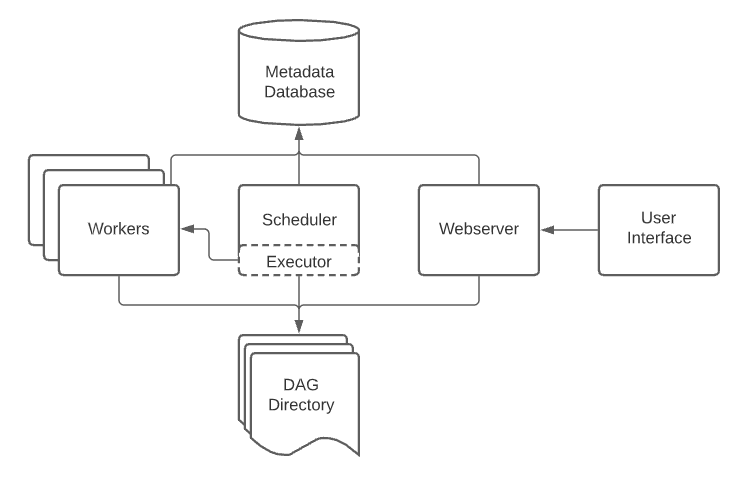

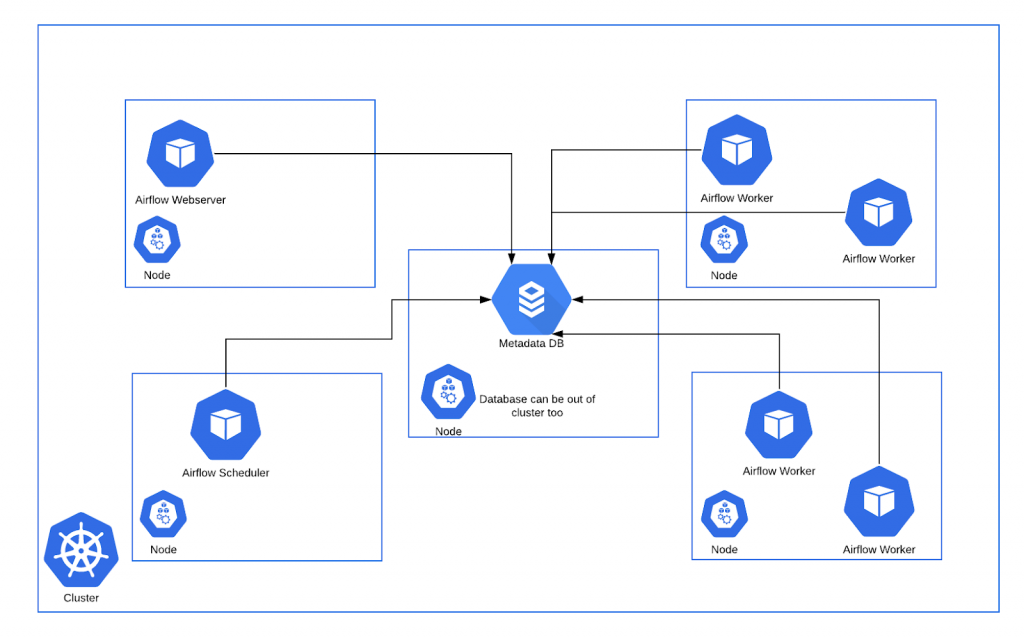

The heart of the AA architecture is the scheduler, which determines when and how workflows are executed. The scheduler reads workflow definitions from the DAG directory, triggers them, and submits their tasks to the executor, which pushes task execution to workers. The webserver provides a UI for inspecting, debugging, and triggering workflows. A metadata database stores AA's state and supports the scheduler, executor, and webserver.
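The division of labor between these components can be illustrated with a toy loop, assuming a hypothetical four-task DAG. Here `graphlib.TopologicalSorter` plays the scheduler (finding tasks whose upstream tasks are done), a `ThreadPoolExecutor` plays the executor and its workers, and a plain dict stands in for the metadata database. This is a conceptual sketch only; Airflow's real scheduler, executors, and database schema are far more involved.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical DAG: each task maps to the set of tasks it depends on.
DAG = {
    "extract": set(),
    "transform": {"extract"},
    "report": {"extract"},
    "load": {"transform"},
}

# Stand-in for the metadata database: records the state of every task.
metadata_db = {}


def worker(task_id):
    """Stand-in for a worker process: performs the task's work."""
    return f"{task_id} done"


def schedule(dag, max_workers=2):
    """Toy scheduler loop; returns task ids in the order they finished."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph is not a DAG
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        while sorter.is_active():
            # Scheduler: pick every task whose upstream tasks are done.
            ready = sorter.get_ready()
            for task_id in ready:
                metadata_db[task_id] = "queued"
            # Executor: push the ready tasks to workers, up to max_workers at once.
            futures = {t: executor.submit(worker, t) for t in ready}
            for task_id, future in futures.items():
                future.result()  # re-raises if the worker failed
                metadata_db[task_id] = "success"
                finished.append(task_id)
                sorter.done(task_id)
    return finished


print(schedule(DAG))
print(metadata_db)
```

Recording every state change in one shared store is what lets the other components (here, only the `print` calls; in Airflow, the webserver) observe progress without talking to the workers directly.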

Kubernetes

At Translucent Computing, we engineer cloud-native systems with Kubernetes at the core. AA provides the Kubernetes executor, which pushes task execution to a Kubernetes cluster, so the cluster can scale elastically with worker demand. At Translucent, we also deploy AA itself into the Kubernetes cluster, as in the diagram above. Running both AA and its workers on Kubernetes simplifies the DevOps CI/CD pipeline and allows DataOps to manage workflows effectively.
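The Kubernetes executor is selected in configuration (`executor = KubernetesExecutor` under `[core]` in `airflow.cfg`) and runs each task instance in its own pod. The sketch below only illustrates that "one pod per task" idea by building a pod manifest as a plain dict. The helper names, labels, and image are hypothetical, and the command merely mirrors the shape of Airflow 2's `airflow tasks run <dag_id> <task_id> <run_id>` CLI; the real executor builds its pods from a pod template with more settings.

```python
import re


def pod_name(dag_id, task_id, try_number):
    """Build a Kubernetes-safe pod name: lowercase RFC 1123 label, max 63 chars."""
    raw = f"{dag_id}-{task_id}-{try_number}".lower()
    name = re.sub(r"[^a-z0-9-]+", "-", raw).strip("-")
    return name[:63].rstrip("-")


def task_pod_manifest(dag_id, task_id, run_id, image, try_number=1):
    """Hypothetical sketch: describe one pod that runs a single task instance."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": pod_name(dag_id, task_id, try_number),
            "labels": {"dag_id": dag_id, "task_id": task_id},
        },
        "spec": {
            # In this sketch, retries are left to the scheduler, not Kubernetes.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "task",
                    "image": image,
                    "command": ["airflow", "tasks", "run", dag_id, task_id, run_id],
                }
            ],
        },
    }


manifest = task_pod_manifest(
    "Sales_ETL", "load_to_warehouse", "manual__2021-12-03", "example/airflow-worker:latest"
)
print(manifest["metadata"]["name"])  # prints "sales-etl-load-to-warehouse-1"
```

Because each pod exists only for the duration of one task, the cluster's capacity follows the number of running tasks, which is the elasticity described above.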

December 3rd, 2021

by Patryk Golabek in Technology
